In this post we see the actual results of different G-Buffer formats.

For this stage, I created two classes to hold the shader programs: hlslDeferredObjectPass and hlslDeferredLightPass. The object pass is basically an extension of xlslModularProgram and will become a plugin in the near future; I just wanted to keep things nice and separate for early prototyping. Its behavior is practically identical to xlslModularProgram, except that it also manages the render targets.

The G-Buffer format for each render target is passed as a parameter on shader creation, which makes it quite easy to set up different buffer formats.

To make a long story short, the shaders I have created expect the following render targets:

1) R: Depth (hardware Depth stencil buffer, can just as easily be another buffer)

2) RGB: Normal

3) RGB: Diffuse A: Gaussian shininess(*)

4) RGB: Specular A: Phong shininess(*)

Of the two specular gloss (shininess) parameters, only one is needed at a time. Because I switch specular models quite often, and since I have three free slots in my buffers anyway, I decided to include both to keep my specular calculations pluggable. In a production environment one would only need one of the two, depending on the specular model. Also, since I plan on using a normalized format, the Phong exponent needs to be translated to the 0-1 range. I simply divide it by 256 and clamp it to 0-1, as I believe a maximum Phong exponent of 256 is quite enough, as is a resolution of about 1. If I find reason to believe otherwise, I can always switch to a different scheme.

After testing and retesting several depth encoding schemes, I settled on Crytek's suggested solution: encoding depth as Depth/FarClipZ, and rebuilding the position in the lighting pass by using the view frustum corners to find the view-direction ray. A detailed explanation, along with several other depth-encoding techniques, can be found in this excellent read.

The general idea is that you can get a ray from the camera to the pixel of interest by interpolating the far view-frustum corners across the screen, and with this ray and the depth you can reconstruct the position very, very efficiently (just a multiplication in the pixel shader).

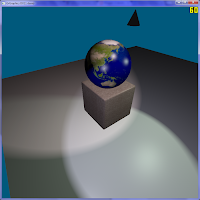

|

| Forward |

|

| Deferred |

I have only written the deferred shader for the Simple Diffuse Textured version for now.

Anything that has to do with materials and geometry, up to and including displacement mapping, is bound to be trivial, as it will basically be a copy of the corresponding forward shader, minus the lighting calculations, plus the render target writes.

Shadow maps integrate normally, though not necessarily trivially. To be honest, I feel that they might add back some of the algorithmic complexity that deferred rendering removed (since shadow maps work by rendering lights x objects), and they will need quite some optimization to work correctly.


For now, the spotlight is still on the G-Buffers.

As noted, the buffers are simply configurable on the shader definition:

```cpp
// ...
config.vertexUv_AttributeSemantic = "IA_INPUT_TEXUV";

DXGI_FORMAT fmt[3];
fmt[0] = DXGI_FORMAT::DXGI_FORMAT_R8G8B8A8_SNORM; //XYZ: NORMAL W: - USED FLAG
fmt[1] = DXGI_FORMAT::DXGI_FORMAT_R8G8B8A8_UNORM; //XYZ: DIFFUSE W: SPEC_FACTOR PHONG/256
fmt[2] = DXGI_FORMAT::DXGI_FORMAT_R8G8B8A8_UNORM; //XYZ: SPECULAR W: SPEC_FACTOR GAUSSIAN

//Could do that for a non-hardware Depth buffer
//(the array would then need to be declared as DXGI_FORMAT fmt[4]):
//fmt[3] = DXGI_FORMAT::DXGI_FORMAT_R32_FLOAT;

shader->init(config);
// ...
```

The shader listings are quite involved at this point, so let's focus on a few pieces of interest.

Writing to the G-Buffers in the pixel shader of the first pass (object rendering) is quite easy. Only the depth needs special mention:

```hlsl
struct PSout {
    float4 eye_normal: SV_Target0;
    float4 material_diffuse_alpha: SV_Target1;
    float4 material_specular_shininess: SV_Target2;
    float depth: SV_Depth;
};

PSout main(PSin inp){
    PSout outp;
    outp.depth = -(inp.eye_position.z/projectionDetails.zFar);
    outp.eye_normal.xyz = normalize(inp.eye_normal);
    outp.eye_normal.w = material.gaussian_gloss;
    outp.material_diffuse_alpha.xyz = diffuseTexture.Sample(textureSampler, inp.texUv).xyz;
    outp.material_diffuse_alpha.w = material.alpha;
    outp.material_specular_shininess.xyz = material.specular.xyz;
    outp.material_specular_shininess.w = saturate(material.phong_gloss/256);
    return outp;
}
```

As mentioned, we are using a special kind of depth: this hand-normalized depth encodes how far the point lies along the ray from the camera through the pixel to the far clip plane, as a fraction of that ray (0 at the camera, 1 at the far plane).

If you use the hardware depth buffer, keep in mind that you must output a normalized depth (in the 0-1 range), something you wouldn't need to do with a dedicated render target texture for depth.

And here is an example of a fetch in the pixel shader of the lighting pass:

```hlsl
float4 sample_normal_used = normalTexture.Sample(textureSampler, inp.texUv);

//Discard the pixel if it has not been written to - something like a custom
//stencil test before I enable a real stencil test - which I will.
//This is done mainly to maintain the background color of the screen during
//the first, unblended pass.
if (sample_normal_used.w == 0) { discard; }

//IMPORTANT - Keep in mind, the normal may end up having a length > 1 due
//to the 8 bit snorm storage, which can cause problems later if some
//specific calculation absolutely requires it to be <=1, notably the inverse
//trigonometric functions acos and asin used for Gaussian specular. In these
//cases, before guarding it, I got a black artifact in places where the
//specular highlight would be brightest: the normal and the half-angle vector
//were parallel, but the normal had a length of slightly >1, making acos undefined.
float3 eye_normal = sample_normal_used.xyz;
```

If the normal has not been written to (its A component is zero), the pixel is discarded. This is something you would normally use the stencil buffer for, and you should use the stencil buffer for it rather than the pixel shader. This is just a first prototyping attempt; I am only doing it temporarily as a way to maintain the background color in unwritten pixels.

For reference, the forward renderer runs at 2658 FPS.

We will only be using 3 lights for the test, as we are not comparing forward vs. deferred here (the deferred renderer is not yet properly optimized for lights), but rather the performance and visual precision of the different buffer formats.

I will run some tests with multiple tiny lights later.

128-bit-wide render targets: 480 FPS

The first deferred shading test was run with the horrendous 128x4 = 512-bit-per-texel G-Buffer.

Also, for simplicity of prototyping, all lights are currently rendered as full-screen quads and NOT with the correct, recommended bounding volumes or bounding quads.

Buffers:

1) DXGI_FORMAT_R32G32B32A32_FLOAT,

2) DXGI_FORMAT_R32G32B32A32_FLOAT,

3) DXGI_FORMAT_R32G32B32A32_FLOAT,

4) DXGI_FORMAT_R32G32B32A32_FLOAT

Thankfully, it's (seemingly) pixel perfect!

The FPS is 480, quite bad I'd say...

64-bit-wide render targets: 1028 FPS

The second test follows the same philosophy, but cuts the precision of everything except depth in half. This gives us a 64x4 = 256-bit-per-texel G-Buffer.

Buffers:

1) DXGI_FORMAT_R32G32_FLOAT,

2) DXGI_FORMAT_R16G16B16A16_FLOAT,

3) DXGI_FORMAT_R16G16B16A16_FLOAT,

4) DXGI_FORMAT_R16G16B16A16_FLOAT

The screenshots don't look any the worse for wear; the output is still as good as the R32 version.

The FPS more than doubled to 1028, though, showing us that we are (predictably) 100% memory-bandwidth bound here.

32-bit-wide render targets: 1580 FPS

Position is encoded as full-precision depth, while normals and albedos are stored in normalized formats with 8 bits per component. That is 32x4 = 128 bits per texel.

Buffers:

1) DXGI_FORMAT_R32_FLOAT,

2) DXGI_FORMAT_R8G8B8A8_SNORM,

3) DXGI_FORMAT_R8G8B8A8_UNORM,

4) DXGI_FORMAT_R8G8B8A8_UNORM

The FPS gained roughly another 50% (1028 to 1580), more than tripling compared to the initial deferred test (480).

Understandably, all of these are considerably slower than the forward renderer. Two factors are at play here.

One, we are only using three lights. Remember that the deferred renderer really shines (pun intended) with multiple small lights.

Two, each light still causes a full-screen execution of the pixel shader - light bounding volumes have not yet been implemented.

Further optimizations in the next post.
